Context

I took part in the adventure at LANDR, a Montreal-based company that creates online tools for musicians. Fascinated by music and its impact on users (so fascinated that I wrote a conference paper about it), I was able to set up many user research projects there. As the only UX Researcher on board, I felt like I was in a playground! 🎶

So for one of my first projects, I led the research effort to improve the team's understanding of the user experience of LANDR's most important product: mastering via artificial intelligence.

Role: Led the entire UX research effort, in collaboration with David Magère & Karim Dahou (Product Designers), Marc-André Veronneau (Head of Product Design), Louis Thompson-Amadei (Product Marketing Manager), and Patrick Bourget (Product Director)

Methodology: Questionnaire, user testing

Tools: Typeform, Figma, Notion, Google Meet

Duration: About two months

Meet the mastering tool

To understand the project, you have to understand the mastering tool first.

To summarise very roughly, mastering is the final processing step applied to a finished mix (a song whose drums, vocals, bass, etc. have already been combined) to prepare it for distribution on streaming platforms. The tricky goal here is to make it sound just as good whether you listen to it on Spotify in your car or on your iPhone!

This mastering process, usually done by a specialised engineer, can be expensive and time-consuming. LANDR became known in the music industry by offering a similar service, powered by artificial intelligence, for a fraction of the cost and time. And this incredible tool is what I had the chance to start working on at LANDR!

So I decided to bring the product team the most relevant answers about how people experience this tool, by proposing a dual approach: user tests with people unfamiliar with the automated mastering service, and a survey of the service's current users.

Mixed methods

But why run a dual study with both users and prospects? First, the research scope was so broad that these two groups, who have very different experiences with the product, had to be studied separately: the topics and issues relevant to each were too different. Second, the product team had no preliminary qualitative or quantitative data on these topics, so I took the initiative to provide both to improve their understanding.

For people new to AI mastering, what was their understanding of the service? What elements of the user flow were a source of frustration or confusion?

For people currently using the service, what was their opinion of the service? What were their pain points? What would be the most relevant features to prioritise?

So I recruited 7 participants close to our personas for the qualitative part of this study and reached out to more than 850 respondents for the quantitative part. And the easier of the two was not necessarily the one you might think!

User Tests

For the user tests, I simply used the live version of the site and asked participants to follow the classic path from registration to the final checkout page. This gave me feedback as close as possible to the "real first-time user experience", which is what matters most in this kind of research! The main source of bias was actually the presence of the researcher (me :) ). It would have been interesting to run these tests unmoderated, to remove the bias of direct observation as well. But given time and budget constraints, running moderated sessions over Google Meet was the best fit for the team's needs.

The results of this part of the study were positive overall: all participants managed to master their track without a hitch and reach the pricing page! 🎉 However, many elements of the sign-up flow and the pricing page were flagged as problematic, particularly the copy, which would benefit from being clearer.

For example, several participants were familiar with "traditional" mastering but not with AI mastering. They therefore assumed the process would take several hours or even days, when in reality it only took a few minutes! This was mostly because prospects were given no point of reference at all, so they had nothing but their existing mental model to judge this new product by.

Survey

For the questionnaire, I reached out to our user base with a Typeform link that had first been validated by the marketing team. Over my various experiences, I've noticed that marketing and UX teams share many methods, even if their end goals differ.

So this was a perfect opportunity to collaborate and brainstorm questions relevant to everyone. A single joint survey also means fewer emails for our nice users and a higher response rate!

The answers to this questionnaire showed how the tool was currently perceived, helped prioritise the roadmap (which was directly shaped by these results), and also revealed unexpected insights into how the tool is used.

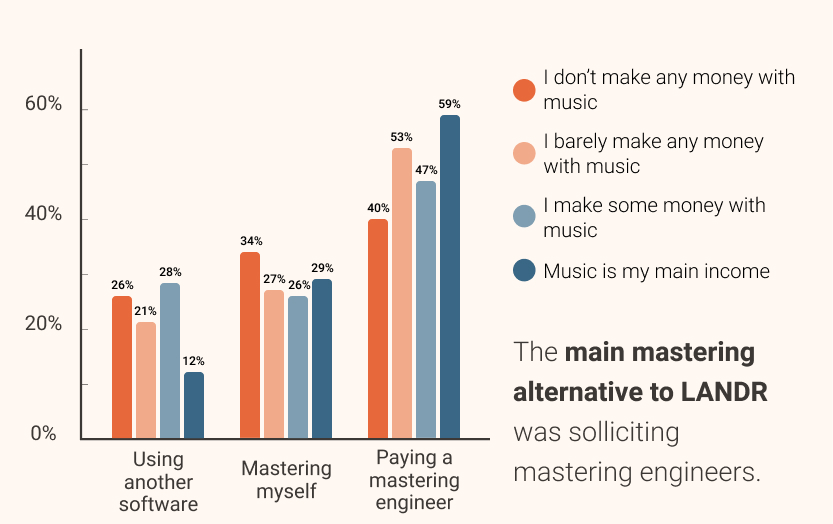

One of the most surprising insights, for example, was that the most frequent alternative to LANDR's automated mastering was not a competing mastering service but hiring a sound engineer, closely followed by respondents doing the mastering themselves. And this held regardless of their income! This finding can have a major impact on communication: the arguments needed to convince a user to leave a competitor are not the same as those needed to convince them to switch from human help to artificial intelligence.

Conclusion

I obviously can't share all the findings here, but they were interesting enough that I presented them to the whole company. Not bad for a first UXR project at a new company :)

This project remains one of my highlights at LANDR, as it let me apply a mixed-methods approach and help define the right questions with the right people. Presenting to the whole of LANDR was also a perfect evangelisation exercise: in a short time, it let me show the benefits of systematic UX research, even on existing products.

Given the chance, I would have paired this study with prototype tests of upcoming features. But again, time constraints didn't allow it here. Next time!

Thanks for reading!